Media IP engineers designed, commissioned and maintained the resilient, scalable, high-performance IP internetwork that connected all of Discovery’s HD video, audio, communication and file-based resources within the International Broadcast Centre (IBC), out to and across 13 games venues, and on to Eurosport client sites for the Winter Olympics in PyeongChang, Korea, in January and February 2018.

The IP network was fully operational and integrated with:

- Live HD-SDI (200+ paths) and file-based broadcast content delivery, intercoms and equipment control across four Eurosport IBC studios and 13 venues.

- Intercontinental transmission links to Discovery’s Eurosport clients and libraries.

- MediaGrid and venue-based ingest services provided by EVS.

- IBC distribution and venue information services provided by OBS.

Venue-to-IBC IP infrastructure within Korea was provided to all venues by Olympic Broadcasting Services (OBS). Diverse managed trunks carried all broadcast, corporate ‘user’, streaming, control and coordination traffic.

Intercontinental transfer and transmission path services, together with the WAN IP infrastructure carrying 70+ HD feeds to European-based content presentation, transmission and archive facilities and 48 broadcast ‘markets’, were delivered by Globecast and tightly integrated with NEP’s LANs in the PyeongChang IBC.

Performance required:

- In excess of 99.999% uptime (‘5x9s’)

- Clearly prioritize IBC <-> Venue traffic types in the following QoS hierarchy:

  - IP system management (routing protocols etc)

  - Realtime high value streaming media traffic (2022-6/7, AoIP)

  - SSH/Telnet configuration and monitoring

  - Non-broadcast streaming (IPTV)

  - Equipment control (SDI Matrix, RCPs, SIP, GUI configurators)

  - Content file transfer (EVS Funnell)

  - Network statistics gathering (SNMP and Netflow)

  - Web browsing

  - Discovery ‘corporate staff user’ traffic

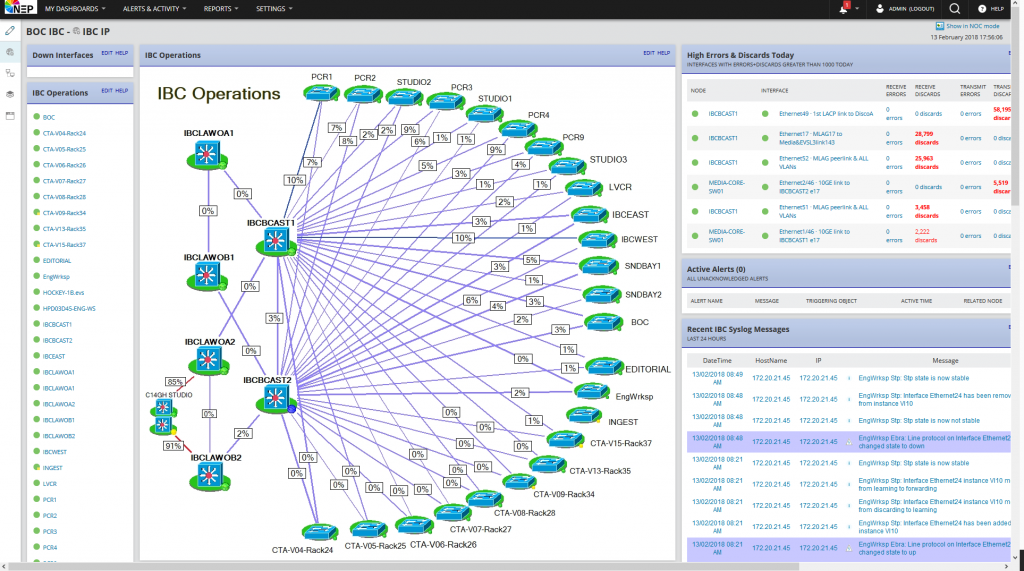

- Be simple to monitor and troubleshoot.

- Utilise all physical layer resources to provide BOTH resilience and load balancing.

- Use protocols and methods that are proven and understood.

- Integrate cleanly and efficiently with both the local LANs at each venue and the intercontinental WAN over to Discovery’s principal European transmission networks.
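To put the ‘5x9s’ requirement in concrete terms, the short sketch below converts an availability target into a cumulative outage budget. The function name and the 17-day operating window are assumptions made for illustration; the actual contractual measurement period is not stated here.

```python
def downtime_budget_seconds(availability: float, period_days: float) -> float:
    """Return the maximum cumulative outage, in seconds, allowed over a period."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be a fraction between 0 and 1")
    period_seconds = period_days * 24 * 60 * 60
    return (1.0 - availability) * period_seconds


FIVE_NINES = 0.99999
GAMES_DAYS = 17  # assumption: length of the operating window, for illustration only

per_year = downtime_budget_seconds(FIVE_NINES, 365)
per_games = downtime_budget_seconds(FIVE_NINES, GAMES_DAYS)

print(f"Budget over a year:  {per_year:.1f} s")
print(f"Budget over {GAMES_DAYS} days: {per_games:.1f} s")
```

Under these assumptions the budget is a little over five minutes per year, or under 15 seconds across a 17-day event, which is why automatic failover rather than manual intervention is needed to meet it.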

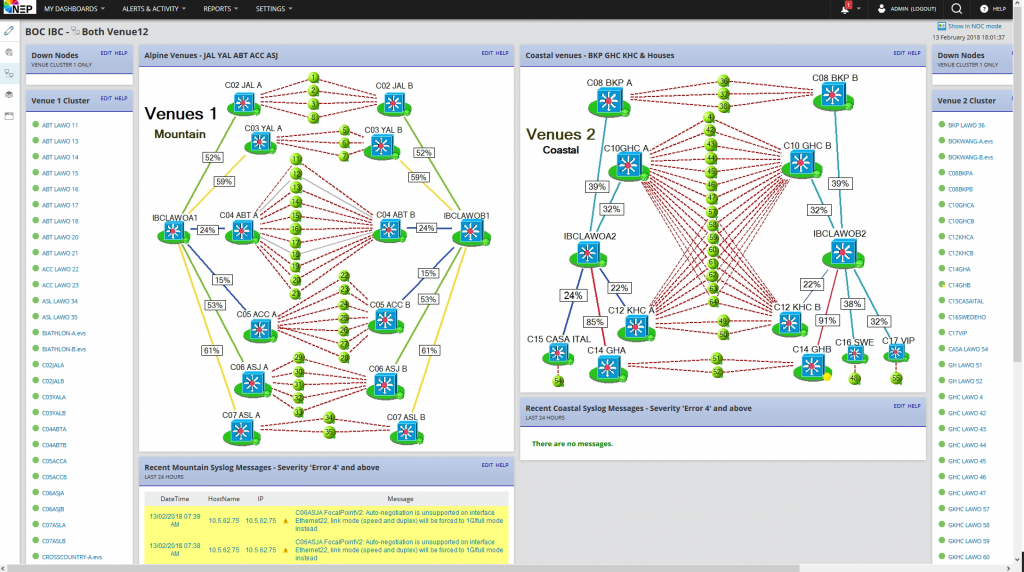

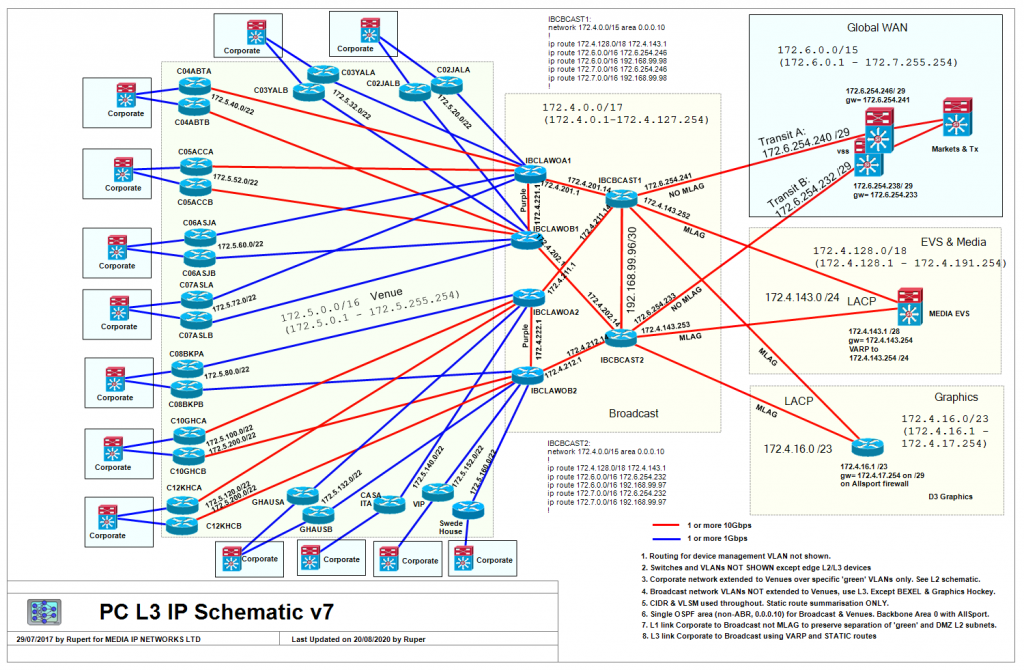

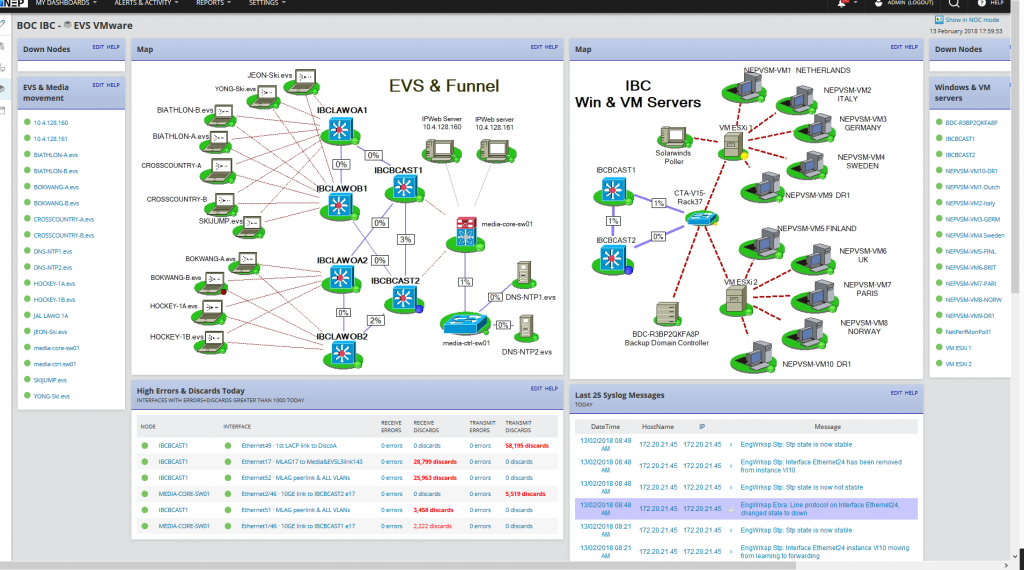

The IP architecture was based on a proven and well-understood collapsed-core topology, as below. Two powerful Arista 1750 L3 switches formed the resilient switched core, with full L3 routing across all 90+ IBC LAN subnets; only 6 subnets were directly connected to each core switch, and supernetting was used to avoid unwieldy routing tables. A further distribution layer of four 7150s provided the downlinks to the 13 connected venues, two on each of the A and B venue link sides provided by Olympic Broadcasting Services. These four critical switches were interconnected in a grid topology using both link aggregation (as MLAG) and OSPFv3 equal-cost path load balancing. No instability, measured as packet loss or path jitter, was detected at any point during installation or operational use.

Supernetting was widely implemented at the core and distribution levels. A few static routes were entered to spare the core switches more complex link-state calculations and to prevent ‘rogue’ configs from pulling down the core’s routing table.
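As a minimal illustration of what supernetting buys, the sketch below collapses four contiguous /24 subnets into a single summary route using Python's standard `ipaddress` module. The addresses are hypothetical; the real addressing plan is not given in this write-up.

```python
import ipaddress

# Hypothetical LAN subnets, chosen to be contiguous so they summarise cleanly.
venue_subnets = [
    ipaddress.ip_network("10.20.0.0/24"),
    ipaddress.ip_network("10.20.1.0/24"),
    ipaddress.ip_network("10.20.2.0/24"),
    ipaddress.ip_network("10.20.3.0/24"),
]


def summarise(subnets):
    """Collapse adjacent or overlapping subnets into the fewest covering routes."""
    return list(ipaddress.collapse_addresses(subnets))


def covered_by(summary_routes, subnet):
    """Return True if the subnet falls inside any of the summary routes."""
    return any(subnet.subnet_of(route) for route in summary_routes)


summary = summarise(venue_subnets)
print(summary)  # four /24 entries become one /22 entry
print(covered_by(summary, ipaddress.ip_network("10.20.2.0/24")))
```

The core then carries one route per block instead of one per subnet, which only works if the addressing plan allocates each site a contiguous, aligned range.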

OSPFv3 is a link-state routing protocol; configured with short LSA timers and dynamic, near-equal path costs, it provided proportional load balancing as well as full, automatic failover resilience. This proven technique quietly doubled WAN capacity by putting the backup paths into full use, effectively delivering 2x1Gbps (or 2x10Gbps) provision at L3. The trade-off was that, if a link was compromised while traffic levels exceeded the capacity of a single link, resilience fell back on effective QoS to protect critical traffic.
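The trade-off described above can be sketched in a few lines. The first function pins each flow to one of the equal-cost paths by hashing its 5-tuple, which is the general idea behind equal-cost multipath; it is not the hashing the Arista hardware actually performs. The second models what happens when one link is lost, using a simplified strict-priority scheme that assumes the QoS hierarchy in the performance requirements is listed highest priority first. All demand figures are hypothetical.

```python
import hashlib


def pick_path(flow, paths):
    """Pin a flow to one equal-cost path by hashing its 5-tuple (illustrative only)."""
    if not paths:
        raise ValueError("no paths available")
    key = "|".join(str(field) for field in flow).encode()
    digest = hashlib.sha256(key).digest()
    return paths[int.from_bytes(digest[:4], "big") % len(paths)]


def admit_by_priority(demands_mbps, capacity_mbps):
    """Serve classes in listed order until the available capacity runs out."""
    remaining = capacity_mbps
    served = {}
    for traffic_class, demand in demands_mbps:
        granted = min(demand, remaining)
        served[traffic_class] = granted
        remaining -= granted
    return served


# Hypothetical per-class demand in Mbps, in the order of the QoS hierarchy.
DEMANDS = [
    ("system management", 5),
    ("realtime media", 700),
    ("ssh/telnet", 5),
    ("iptv", 100),
    ("equipment control", 20),
    ("file transfer", 600),
    ("statistics", 10),
    ("web browsing", 50),
    ("corporate user", 200),
]

flow = ("10.20.1.10", "10.30.1.10", "udp", 5004, 5004)  # hypothetical 5-tuple
print(pick_path(flow, ["link-A", "link-B"]))

both_links = admit_by_priority(DEMANDS, 2000)  # 2x1Gbps in service
one_link = admit_by_priority(DEMANDS, 1000)    # one link compromised
for name, demand in DEMANDS:
    print(f"{name:18} wants {demand:4}  both={both_links[name]:4}  one={one_link[name]:4}")
```

With both links up every class is served in full. With a single link, the higher classes still receive everything they ask for, while file transfer is squeezed and the lowest classes receive nothing, which is the behaviour the QoS hierarchy is meant to guarantee.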

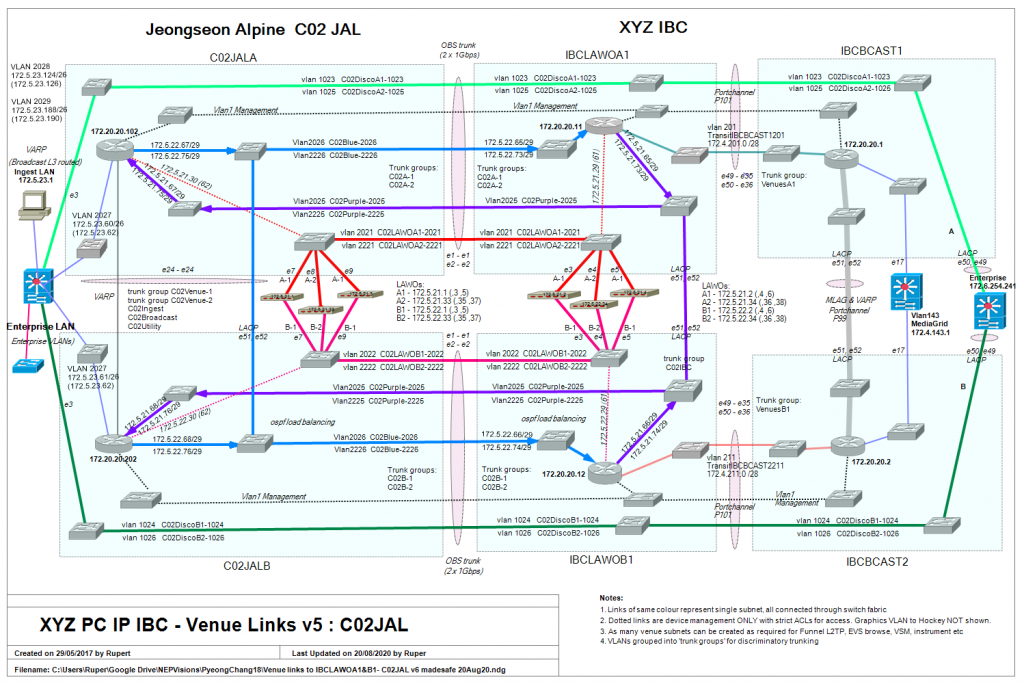

All of the remote terminations (venues) at the end of each spoke followed exactly the same design, with custom changes made only for IP addressing and for additional services/subnets as required. All remote subnets were gatewayed through resilient, load-balancing, QoS-enforcing Arista 7150 L3 switches with very similar, proven configurations supporting OSPF, MLAG and VARP for all remote services, whether implemented or not. As well as providing direct access uplinks for the 34+ venue LAWO Rem4s codecs, these switches were the principal distribution point to secondary access switches (Cisco, Netgear) for non-2022 VLANs such as VSM, Ravenna, Ingest and Riedel.

Across the whole estate, a strict condition applied: each and every subnet existed on a single VLAN with a single gateway. Per-VLAN Spanning Tree (PVST) was deployed in every VLAN with no STP performance degradation, since each VLAN was limited to a maximum of 4 switches, with the exception of corporate end-to-end traffic. Portfast was universally applied, and no STP instability was seen in the syslogs from any switch, even with MLAG interlinks. There were no VLAN loops, and Spanning Tree was NOT used to provide any failover mechanism. VLAN trunking was deployed throughout, with central, simple supernetted L3 IP routing in the collapsed core providing a stable internetwork that worked end-to-end at line speed for all services, including EVS, Graphics and operational TV surfaces.
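A rule like "one subnet, one VLAN, one gateway" is easy to check mechanically against an inventory. The sketch below is one possible way to do so; the record layout, function name and addresses are hypothetical and do not reflect any tooling used on the project.

```python
import ipaddress
from collections import defaultdict


def check_one_vlan_one_gateway(records):
    """Check (subnet, vlan_id, gateway) records and return a list of violations."""
    vlans = defaultdict(set)
    gateways = defaultdict(set)
    problems = []
    for subnet, vlan_id, gateway in records:
        net = ipaddress.ip_network(subnet)
        gw = ipaddress.ip_address(gateway)
        if gw not in net:
            problems.append(f"{net}: gateway {gw} is outside the subnet")
        vlans[net].add(vlan_id)
        gateways[net].add(gw)
    for net, ids in vlans.items():
        if len(ids) > 1:
            problems.append(f"{net}: spans VLANs {sorted(ids)}")
    for net, gws in gateways.items():
        if len(gws) > 1:
            problems.append(f"{net}: has {len(gws)} gateways")
    return problems


# Hypothetical inventory: the second entry breaks the single-VLAN rule,
# and the third has a gateway that does not belong to its subnet.
inventory = [
    ("10.20.1.0/24", 101, "10.20.1.1"),
    ("10.20.1.0/24", 102, "10.20.1.1"),
    ("10.20.2.0/24", 201, "10.20.3.1"),
]

for problem in check_one_vlan_one_gateway(inventory):
    print(problem)
```

An empty result means every subnet in the inventory maps to exactly one VLAN and one gateway address.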

Venue-to-venue traffic was fully implemented as required, including 12+ broadcast HD camera feeds carried between the Ice Hockey venues as IP JPEG2000 streams over the protected XYZ/OBS infrastructure, notwithstanding security issues. Similarly, all broadcast IP sources at every venue were fully available at all IBC and Europe-wide transmission centres.

Media IP networks commissioned a VMware ESXi 6.7 server and populated it with 10x Windows 10 VMs. A working, secure and reliable Windows 10 VM with the VSM application was trialled and replicated 11 times to create the battery. Remote broadcasters in Europe and at the venues used regular MS Terminal Services clients (MS native, MobaXterm, VNC) to remotely control a strictly allocated and firewalled VM instance, selecting which IBC sources were routed to their WAN links for Tx. Confidence was high that these VMs were reliable and effective. Reportedly, these VMs were used to change sources on air from the broadcasters’ galleries in Europe.